Keys To Establishing Success In Reservoir Characterization

Uncertainties can be incorporated into the workflow from the static domain to full dynamic reservoir simulation. Central to this has been the Big Loop automated workflow that tightly integrates the static and dynamic domains so they are synchronized throughout the field’s lifetime and so that risks are quantified in the model.

This synchronization takes place at the outset of the workflow, and some of the crucial reservoir characterization stages that occur prior to the dynamic phase can determine the success or failure of the simulation.

Data preparation

One drawback of many reservoir characterization workflows today is poor data integration: data, knowledge and the associated uncertainties get lost along the way, a particular challenge when dealing with multiscale data and multisource information.

Key requirements are that models should be easily updateable through an automated, repeatable workflow; should be fully integrated and consistent with available data; should operate from seismic interpretation through to flow simulation; and should propagate and manage uncertainties throughout the characterization chain.

Another important factor influencing dynamic simulation is robust and consistent data integration in the 3-D models, for example, the ability to correctly represent wells (including horizontal sections), seismic (including 4-D) and production data.

It also is necessary to have quality control tools and to understand what information needs to come from the data. Questions that should be addressed include:

Will the model be a foundation for the complete field life cycle?

Will the model be flexible enough to expand areas of investigation?

Based on production data, can existing layers be modified and new layers and faults incorporated easily?

Seismic interpretation

The ability to quantify geologic risk early in the interpretation process will have a strong impact on the future accuracy and effectiveness of the model. That is why the seismic interpretation stage and the need to closely link seismic with the geological model and make use of all available data are so important.

Seismic interpretation is now a crucial stage. In the past it often was characterized by inherent ambiguity in the data due to limited seismic resolution, constraints on velocity for depth conversion and the issue of scale.

One reason for this change is Emerson’s Model Driven interpretation methodology, in which users not only create the geological model while conducting seismic interpretation but also capture uncertainty during the interpretation phase. Rather than creating one model from thousands of individual measurements, users can create thousands of models by estimating the uncertainty in their interpretations.

In this way uncertainty maps can be used to investigate key risks in the prospect, and other areas can be quickly identified for further study. Interpreters also can create models more representative of the limitations of the data and uncertainty distribution while still respecting the hard data and can condition these models to the seismic data.
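The idea of producing many models from one uncertain interpretation can be illustrated with a small Monte Carlo sketch. This is not Emerson's algorithm: the horizon depths, the per-node standard deviations and the function names are hypothetical, chosen only for illustration. Each realization perturbs the interpreted depth within its assumed uncertainty while leaving hard data (a node with zero uncertainty, such as a well pick) unchanged, and the spread across realizations serves as a simple uncertainty map.

```python
import random
import statistics

def simulate_horizons(depths, sigmas, n_realizations, seed=0):
    """Draw horizon realizations by perturbing each node within its uncertainty.

    depths: interpreted depth per node (m).
    sigmas: assumed standard deviation per node (m); 0.0 marks hard data
            (e.g., a well pick) that every realization must honor.
    """
    rng = random.Random(seed)
    return [
        [rng.gauss(d, s) if s > 0 else d for d, s in zip(depths, sigmas)]
        for _ in range(n_realizations)
    ]

def uncertainty_map(realizations):
    """Standard deviation of depth at each node across all realizations."""
    return [statistics.pstdev(node_values) for node_values in zip(*realizations)]

# Hypothetical five-node horizon profile; node 2 is tied to a well pick.
depths = [2000.0, 2010.0, 2025.0, 2040.0, 2060.0]
sigmas = [15.0, 8.0, 0.0, 8.0, 15.0]

realizations = simulate_horizons(depths, sigmas, n_realizations=1000)
spread = uncertainty_map(realizations)
for node, s in enumerate(spread):
    print(f"node {node}: depth spread = {s:.1f} m")
```

For brevity the sketch perturbs each node independently. A realistic workflow would impose spatial correlation between neighboring nodes so that each realization remains a geologically plausible surface.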

Structural modeling

The next stage is to use the uncertainties captured during seismic interpretation to create a robust 3-D structural framework. This includes all the faults in the reservoir, fault-to-fault relations and fault-to-surface relations, as well as the heterogeneities that result from the way rocks are distributed. Fault modeling often proves critical to achieving a correct history match and can have a major impact on dynamic simulation further down the line.

Within this structural modeling workflow faults are represented as “free point” intersecting surfaces with hierarchical truncation rules, providing a geometrically correct representation of the faults. Fault surfaces closely honor the input data, including well picks, while users retain detailed control, and they can be truncated by unconformity surfaces.

The fault modeling process also is highly automated, and algorithms are data-driven with input data filtered, edited or smoothed. In some cases, a horizon model can be built as an intermediate step between the fault model and the grid.

Additional modeling functionalities also include the ability to build intrusion objects into the structural modeling process (e.g., to better model salt) along with enhanced seismic resolution on horizons and faults for more accurate interpretation.

This ability to handle thousands of faults, edit the fault relationships and ultimately build the reservoir grid means that all members of the asset team can easily update a model, test different interpretations and use the model for specific applications.

The repeatable workflows and easy updating also remove the restriction of having seismic interpretation and depth conversion locked in before any updates can be made. With this workflow, updates can take place throughout the process.

Populating the grid

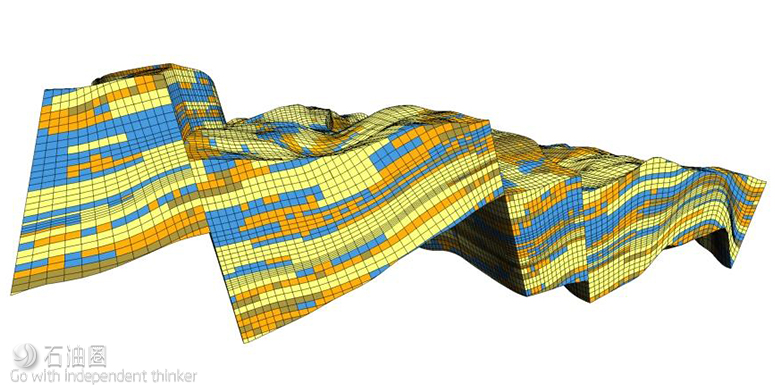

The 3-D grid must be populated with physical parameters such as porosity and permeability to describe the quality of the rock in the reservoir.

Modeling facies, or rock types, in a realistic way while honoring the observations from a large number of wells requires advanced 3-D facies and petrophysical modeling tools.

Emerson’s reservoir characterization workflow includes both object-based facies modeling tools derived from established geological features and more data-driven model-based tools, all of which incorporate seismic information into the models.

The object models allow data extracted from seismic to be blended with geostatistical tools such as guidelines, trends and variograms to generate well-constrained sedimentary bodies that give a more realistic property model, one that acknowledges well data, volumetric constraints and the sedimentological environment. The key to a combined workflow is to support the overall characterization goal: a model that represents the dynamic behavior of the reservoir and is predictive of future flow patterns. Next-generation object modeling tools will be better at respecting geological features and multiwell data scenarios. The ability to quickly update any model as new data arrive also is in line with the Big Loop strategy of easy model updates.
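As an illustration of one of the geostatistical tools named above, the sketch below computes an experimental semivariogram, gamma(h) = sum((z[i+k] - z[i])^2) / (2N), for a regularly sampled property along a well. It is a generic textbook calculation, not the vendor's implementation, and the porosity values, sample spacing and function name are hypothetical.

```python
def experimental_variogram(values, spacing, max_lag_steps):
    """Semivariance for a regularly sampled 1-D series.

    For a lag of k samples (distance h = k * spacing), the semivariance is
    half the mean squared difference over all N pairs separated by k samples.
    Returns a list of (lag_distance, semivariance) tuples.
    """
    result = []
    for k in range(1, max_lag_steps + 1):
        pairs = [(values[i], values[i + k]) for i in range(len(values) - k)]
        if not pairs:
            break  # series too short for this lag
        gamma = sum((b - a) ** 2 for a, b in pairs) / (2 * len(pairs))
        result.append((k * spacing, gamma))
    return result

# Hypothetical porosity samples (fraction) taken every 0.5 m along a well.
porosity = [0.21, 0.22, 0.20, 0.18, 0.15, 0.14, 0.16, 0.19, 0.22, 0.23]
for lag, gamma in experimental_variogram(porosity, spacing=0.5, max_lag_steps=4):
    print(f"lag {lag:.1f} m: semivariance = {gamma:.5f}")
```

A semivariance that rises with lag and then levels off indicates the distance over which samples remain correlated, which is the kind of constraint a property model can take from well data.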

Reservoir simulator links

At this stage it also is essential to facilitate the transition between the static and dynamic domains. One such area is time-dependent data, such as reservoir pressure and production flow data. To this end the workflow includes an events management utility that fully supports these data and facilitates the building and maintenance of simulation-ready flow models.
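One way to picture time-dependent data being organized for a flow model is as a chronological schedule of well events. The sketch below is a generic data-structure illustration and does not describe the actual events management utility; the `WellEvent` class, its fields, the event kinds and the well names are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class WellEvent:
    when: date
    well: str
    kind: str     # hypothetical categories: "perforation" or "rate"
    value: float  # perforated length in m, or rate in m3/day

def build_schedule(events):
    """Group events by date, in chronological order, so that each date can
    become a time step in a simulation schedule."""
    schedule = {}
    for ev in sorted(events, key=lambda e: (e.when, e.well)):
        schedule.setdefault(ev.when, []).append(ev)
    return schedule

# Hypothetical events for one producer (P-1) and one injector (I-1).
events = [
    WellEvent(date(2021, 3, 1), "P-1", "rate", 850.0),
    WellEvent(date(2020, 6, 15), "P-1", "perforation", 12.0),
    WellEvent(date(2021, 3, 1), "I-1", "rate", 1200.0),
    WellEvent(date(2020, 9, 1), "I-1", "perforation", 20.0),
]

schedule = build_schedule(events)
for when, items in schedule.items():
    for ev in items:
        print(when.isoformat(), ev.well, ev.kind, ev.value)
```

Keeping events as immutable records sorted by date means the schedule can be rebuilt from scratch whenever new production data arrive, which fits the repeatable, easily updated workflow described here.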

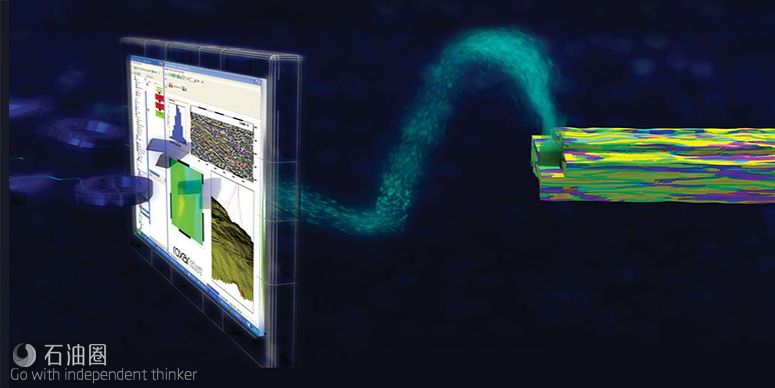

Figure 1 shows a simultaneous visualization of a static model and time-dependent data (based on injector and producer wells and their respective flow rates and perforation intervals) within the workflow. The workflow also can integrate dynamic and static domains by respecting multiple versions of one static model, with stochastic realizations also being taken into account at the history-matching stage through the creation of a “stochastic proxy.”

FIGURE 1. Emerson’s Big Loop workflow tightly integrates static and dynamic domains and ensures a smooth, repeatable and consistent workflow. (Source: Emerson Automation Solutions)

Workflow

The Big Loop workflow remains the cornerstone of the Emerson reservoir characterization process, tightly integrating static and dynamic domains and ensuring a smooth, repeatable and consistent workflow. It is this automated approach, supported by innovative tools, that ensures the best possible model for the reservoir simulator and better-informed field development decisions.

石油圈
